
Removed pupdb as a dependency; diskcache is used instead.

Changed files:

- README.md: 46 additions, 16 deletions

- docs/README.jinja.md: 40 additions, 10 deletions

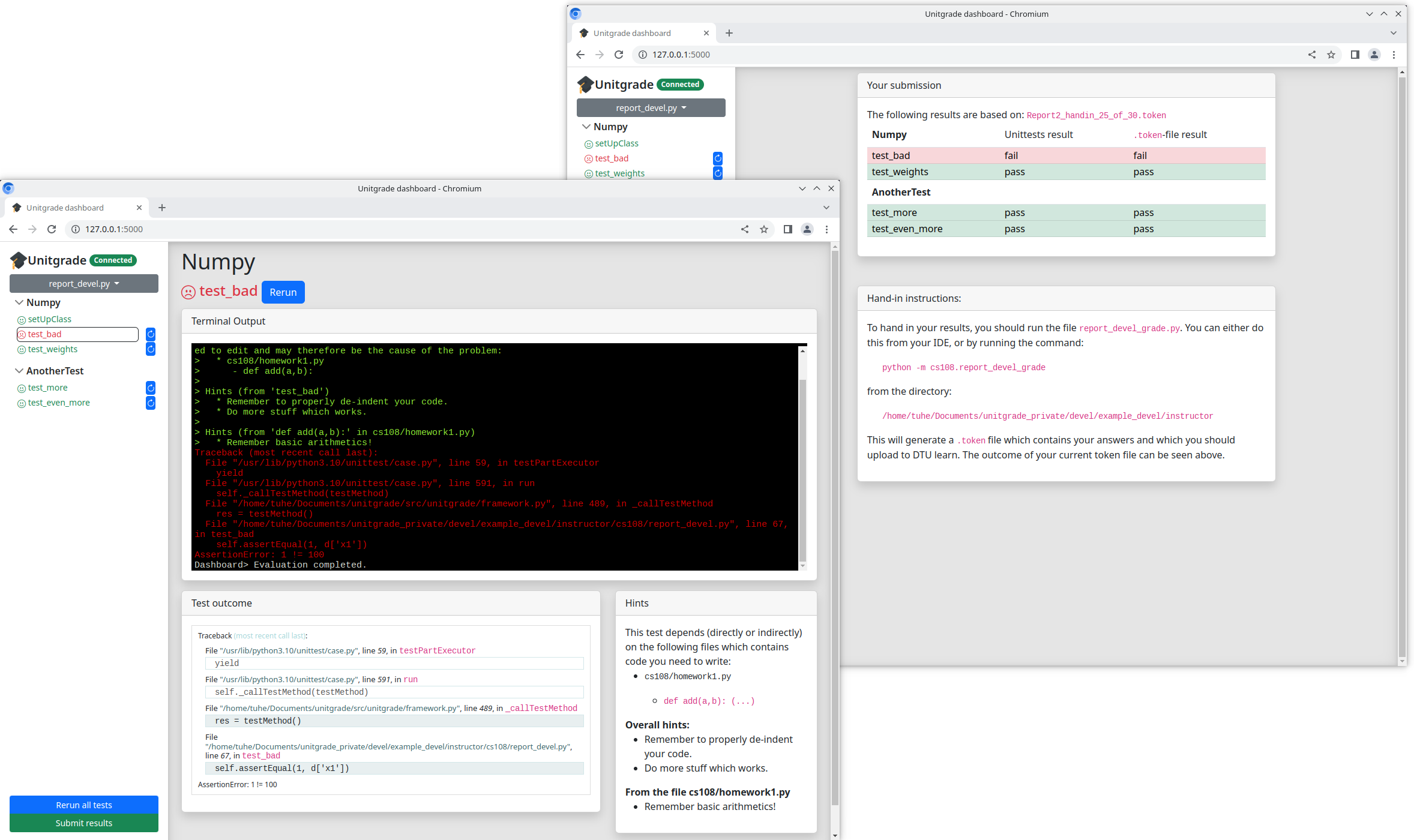

- docs/dashboard.png: 0 additions, 0 deletions

- docs/mkdocs.py: 1 addition, 1 deletion

- docs/unitgrade.bib: 2 additions, 2 deletions

- requirements.txt: 1 addition, 0 deletions

- src/unitgrade/dashboard/app.py: 16 additions, 112 deletions

- src/unitgrade/dashboard/app_helpers.py: 0 additions, 1 deletion

- src/unitgrade/framework.py: 4 additions, 48 deletions

- src/unitgrade/utils.py: 45 additions, 3 deletions

docs/dashboard.png (new file, mode 0 → 100644, 310 KiB)